Till now we’ve seen

Now let’s see some tricks that extend these ideas to Deep neural networks.

Neural Network terminology

From the previous posts, you know that neural networks are good at classification. It does this in four steps

- Calculate the output of the current network.

- Compare the output with the correct output.

- Update weights of the network by Gradient Descent.

- Repeat steps 1-3 until you achieve a good classifier.

Before we proceed further it’s better to know some terminology and notations.

- Training: The whole process shown in the 4 steps above.

- instance: A single data point from the set of data points we’re trying to classify.

- Label: The correct/desired output of an instance.

- Prediction: Calculating the output for a given input using our current set of weights.

Some standard notations

In the activation and MLP post the final output looked something like this $a(Max(0, w_{01}+w_{11}x+w_{21}y)) + b(Max(0, w_{02}+w_{12}x+w_{22}y))+c > 0$. Imagine what a network with hundreds or even thousands of perceptrons would produce. To simplify this, we can stick to a standard notation and abstract away all the cumbersome stuff. Let’s use the following neural network as a reference.

(Note: This notation is adapted from Andrew Ng’s Deep Learning series and tweaked a little.)

Representation of the neural network

Let $L$ be the number of layers. Input is not considered a layer and is often called Layer 0. The hidden layers till the output are numbered from 1 to L. Denote the number of units in the $l^{th}$ layer by $n^{[l]}$. Notations of other units follow.

Input

We use $X/A^{[0]}$ to denote the input. In our network it will be

$$X = A^{[0]} = \begin{bmatrix}

x_1 \\

x_2

\end{bmatrix}$$

Weights and Bias

Consider the weights between input layer and the first hidden layer as shown below

We denote the weights between $i^{th}$ and ${i-1}^{th}$ layer as $W^{[i]}$ and the bias before $i^{th}$ layer as $B^{[i]}$. So in the figure above $W^{[1]}$ and $B^{[1]}$ represent

$$

W^{[1]} = \begin{bmatrix}

w_{11}^{[1]} & w_{21}^{[1]} \\

w_{12}^{[1]} & w_{22}^{[1]}

\end{bmatrix}

$$

$$

B^{[1]} = \begin{bmatrix}

b_1^{[1]} \\

b_2^{[1]}

\end{bmatrix}

$$

Activations

Activations are a little tricky. Because two operations are performed in the a single activation unit. For the $i^{th}$ activation layer the operations are

- $Z^{[i]} = W^{[i]}A^{[i-1]} + B^{[i]}$

- $A^{[i]} = g^{[i]}(Z^{[i]}), \hspace{0.5cm} \text{where g is the activation function.}$

Let’s make this a little more concrete by considering the first activation layer.

$$

Z^{[1]} = W^{[1]}A^{[0]} + B^{[1]}\\

\implies Z^{[1]} = \begin{bmatrix}

w_{11}^{[1]} & w_{21}^{[1]} \\

w_{12}^{[1]} & w_{22}^{[1]}

\end{bmatrix}\begin{bmatrix}

x_1 \\

x_2

\end{bmatrix} + \begin{bmatrix}

b_1^{[1]} \\

b_2^{[1]}

\end{bmatrix}

$$

$$

\text{Assuming the activation is ReLU}\\

A^{[1]} = ReLU(Z^{[1]})\\

\implies A^{[1]} = ReLU\left(\begin{bmatrix}

w_{11}^{[1]} & w_{21}^{[1]} \\

w_{12}^{[1]} & w_{22}^{[1]}

\end{bmatrix}\begin{bmatrix}

x_1 \\

x_2

\end{bmatrix} + \begin{bmatrix}

b_1^{[1]} \\

b_2^{[1]}

\end{bmatrix}\right)\\

\implies A^{[1]} = ReLU\left(\begin{bmatrix}

w_{11}^{[1]}x_1 + w_{21}^{[1]}x_2 + b_1^{[1]} \\

w_{12}^{[1]}x_1 + w_{22}^{[1]}x_2 + b_2^{[1]}

\end{bmatrix}\right)

$$

Output

The output is the last activation layer $A^{[L]}$. It is also denoted by $\hat{Y}$ and calculated by the same logic of the activation layer.

Forward Propagation

The process of forward propagation is to calculate the outputs of each hidden layer. Setting $A^{[0]} = X$, we can start calculating the outputs as follows

$$

Z^{[1]} = W^{[1]}A^{[0]} + B^{[1]},\hspace{0.5cm} A^{[1]} = g^{[1]}(Z^{[1]}) \\

Z^{[2]} = W^{[2]}A^{[1]} + B^{[2]},\hspace{0.5cm} A^{[2]} = g^{[2]}(Z^{[2]}) \\

Z^{[3]} = W^{[3]}A^{[2]} + B^{[3]},\hspace{0.5cm} A^{[3]} = g^{[3]}(Z^{[3]}) \\

. \\

. \\

. \\

Z^{[L]} = W^{[L]}A^{[L-1]} + B^{[L]},\hspace{0.5cm} A^{[L]} = g^{[L]}(Z^{[L]})$$

In a nutshell, given $A^{[l-1]}$ feed forward produces the output $A^{[l]}$. The outputs are calculated in a linear way, hence the name feed forward networks.

- Feed Forward: The process of calculating outputs of each hidden layer for a given input.

Backward Propagation

At this stage we have our predicted labels. It is time for us to update the weights based on our error. To update our weights, we must first look at the gradient with respect to the weights. Use the gradients and move the weights in the direction that decreases our error. In the gradient descent post we took it for granted that our error function is a function of weights. But this is indirectly the case. In practice any error function calculates it’s metric by comparing two things

- the predicted output $\hat{Y}$ and

- the desired output $Y$(label)

So it’s fair to write $E$ is a function of $Y $ and $\hat{Y}$.

Remember that $\hat{Y}$ is dependent on all the weights and biases of the previous layer. So, we can easily show $E$ as a function of every weight layer.

$$

\begin{align}

E(Y, \hat{Y}) &= E(Y, A^{[L]})\\

&= E(Y, g^{[L]}(Z^{[L]})) \\

&= E(Y, g^{[L]}(W^{[L]}A^{[L-1]} + B^{[L]})) \\

&= E(Y, g^{[L]}(W^{[L]}g^{[L-1]}(Z^{[L-1]}) + B^{[L]})) \\

&= E(Y, g^{[L]}(W^{[L]}g^{[L-1]}(W^{[L-1]}A^{[L-2]} + B^{[L-1]}) + B^{[L]})) \\

\end{align}

$$

expanding any further is sheer lunacy. But, you get the point that the error function can be written in terms of each weight layer. Now, we can calculate the gradient with respect to each weight layer.

Chain Rule

For obtaining the partial derivative, we have to differentiate this composite function. This is where the chain rule from calculus can help us. If a variable z depends on variable y, which in turn depends on the variable x then

$$\frac{\partial z}{\partial x} = \frac{\partial z}{\partial y} \frac{\partial y}{\partial x}$$

Using this we can write the derivative of $E$ with respect to $W^{[L]}$ as follows

$$

\begin{align}

\frac{\partial E}{\partial W^{[L]}} &= \frac{\partial E}{\partial A^{[L]}} \frac{\partial A^{[L]}}{\partial W^{[L]}} \\

&= \frac{\partial E}{\partial A^{[L]}} \frac{\partial A^{[L]}}{\partial g^{[L]}} \frac{\partial g^{[L]}}{\partial W^{[L]}}\\

&=\frac{\partial E}{\partial A^{[L]}} \frac{\partial A^{[L]}}{\partial g^{[L]}} \frac{\partial g^{[L]}}{\partial Z^{[L]}} \frac{\partial Z^{[L]}}{\partial W^{[L]}}\\

&=\underbrace{\frac{\partial E}{\partial A^{[L]}} \frac{\partial A^{[L]}}{\partial g^{[L]}} \frac{\partial g^{[L]}}{\partial Z^{[L]}}}_{\frac{\partial E}{\partial Z^{[L]}}} A^{[L-1]}

\end{align}

$$

In a similar fashion

$$

\frac{\partial E}{\partial B^{[L]}} = \frac{\partial E}{\partial A^{[L]}} \frac{\partial A^{[L]}}{\partial g^{[L]}} \frac{\partial g^{[L]}}{\partial Z^{[L]}} \frac{\partial Z^{[L]}}{\partial B^{[L]}}\\

\implies \frac{\partial E}{\partial B^{[L]}} = \frac{\partial E}{\partial A^{[L]}} \frac{\partial A^{[L]}}{\partial g^{[L]}} \frac{\partial g^{[L]}}{\partial Z^{[L]}} = \frac{\partial E}{\partial Z^{[L]}}\\

$$

Cache the previous output

This looks fine for the $L^{th}$ layer but doesn’t it get huge as we approach the derivative with respect to weight layer 1. Let’s see what happens at the ${L-1}^{th}$ derviative.

$$

\begin{align}

\frac{\partial E}{\partial W^{[L-1]}} &= \frac{\partial E}{\partial A^{[L]}} \frac{\partial A^{[L]}}{\partial W^{[L-1]}} \\

&= \frac{\partial E}{\partial A^{[L]}} \frac{\partial A^{[L]}}{\partial g^{[L]}} \frac{\partial g^{[L]}}{\partial W^{[L-1]}}\\

&=\frac{\partial E}{\partial A^{[L]}} \frac{\partial A^{[L]}}{\partial g^{[L]}} \frac{\partial g^{[L]}}{\partial Z^{[L]}} \frac{\partial Z^{[L]}}{\partial W^{[L-1]}}\\

&=\frac{\partial E}{\partial A^{[L]}} \frac{\partial A^{[L]}}{\partial g^{[L]}} \frac{\partial g^{[L]}}{\partial Z^{[L]}} \frac{\partial Z^{[L]}}{\partial W^{[L-1]}} \\

&=\frac{\partial E}{\partial A^{[L]}} \frac{\partial A^{[L]}}{\partial g^{[L]}} \frac{\partial g^{[L]}}{\partial Z^{[L]}} \frac{\partial Z^{[L]}}{\partial A^{[L-1]}} \frac{\partial A^{[L-1]}}{\partial W^{[L-1]}} \\

&=\frac{\partial E}{\partial A^{[L]}} \frac{\partial A^{[L]}}{\partial g^{[L]}} \frac{\partial g^{[L]}}{\partial Z^{[L]}} \frac{\partial Z^{[L]}}{\partial A^{[L-1]}} \frac{\partial A^{[L-1]}}{\partial g^{[L-1]}} \frac{\partial g^{[L-1]}}{\partial W^{[L-1]}}\\

&=\frac{\partial E}{\partial A^{[L]}} \frac{\partial A^{[L]}}{\partial g^{[L]}} \frac{\partial g^{[L]}}{\partial Z^{[L]}} \frac{\partial Z^{[L]}}{\partial A^{[L-1]}} \frac{\partial A^{[L-1]}}{\partial g^{[L-1]}} \frac{\partial g^{[L-1]}}{\partial Z^{[L-1]}} \frac{\partial Z^{[L-1]}}{\partial W^{[L-1]}}\\

&=\underbrace{\frac{\partial E}{\partial A^{[L]}} \frac{\partial A^{[L]}}{\partial g^{[L]}} \frac{\partial g^{[L]}}{\partial Z^{[L]}} \frac{\partial Z^{[L]}}{\partial A^{[L-1]}} \frac{\partial A^{[L-1]}}{\partial g^{[L-1]}} \frac{\partial g^{[L-1]}}{\partial Z^{[L-1]}}}_{\frac{\partial E}{\partial Z^{[L-1]}}} A^{[L-2]}\\

\end{align}

$$

Notice while calculating $\frac{\partial E}{\partial W^{[L-1]}}$ we already had the result of $\frac{\partial E}{\partial A^{[L]}} \frac{\partial A^{[L]}}{\partial g^{[L]}} \frac{\partial g^{[L]}}{\partial Z^{[L]}}$ from computing $\frac{\partial E}{\partial W^{[L]}}$. We can save this calculation from every previous layer and use it in our current computation. So, it won’t be messy when we get down to the last layer. In general going down a layer each time you’ll notice a pattern that

Updating the weights

So we’ve come to the final part of this simple algorithm. Remember that we need to take small steps in the direction decreasing the error. Here $\alpha$, the learning rate does the job. As long as you don’t set the learning rate high, you should be fine. Finding a good learning rate is for another post.

Updating the weights is straightforward

$$W^{[i]} := W^{[i]} - \alpha \frac{\partial E}{\partial W^{[i]}}$$

$$B^{[i]} := B^{[i]} - \alpha \frac{\partial E}{\partial B^{[i]}}$$

- Backward Propagation: The process of calculating each weights’ contribution to the error and updating them accordingly.

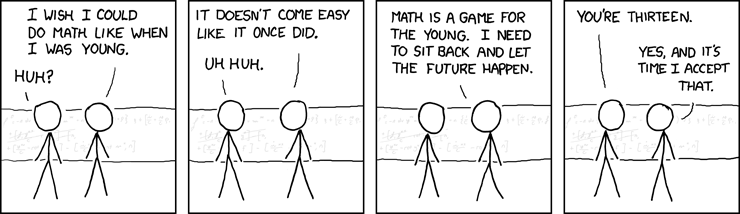

If you don’t get something from the post, relax! Take a step back and rework it again. Despite what you’ve heard, back propagation is as simple idea. Uses basic calculus and linear algebra. Ending this post on a relief note :)