In the last post, on overfitting and underfitting, we saw that achieving a perfect classifier is a tedious task. In this post, let’s look at some common tricks and methods to control the generalization of a network.

The cost function curve

In general, the overall trend of the training and testing errors is as shown below.

This graph makes sense intuitively:

- In the early stages of training, the network is still trying to fit the training set. While doing so, it also picks up patterns that generalize, which shows up as a decrease in the test error. This is the under-fitting region: the network still has a lot to learn.

- After a while, the network reaches good generalization: the test error hits its lowest point while the training error is also low. This is roughly the best generalization you can achieve with the current network.

- If we keep training, the network starts to over-fit the training set. The training error keeps falling while the test error rises, so the generalization gap (the difference between test and training error) grows steadily.
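To make the three regions concrete, here is a small sketch that takes per-epoch training and test errors, finds the epoch with the lowest test error (the good-generalization point), and computes the generalization gap at every epoch. The function name and the error values are made up for illustration; they are not measurements.

```python
def analyze_curve(train_err, test_err):
    """Return the epoch with the lowest test error and the per-epoch generalization gap."""
    # Epoch where the test error bottoms out: the "good generalization" point.
    best_epoch = min(range(len(test_err)), key=lambda i: test_err[i])
    # Generalization gap = test error - training error, epoch by epoch.
    gaps = [te - tr for tr, te in zip(train_err, test_err)]
    return best_epoch, gaps


# Hypothetical per-epoch errors shaped like the curve described above.
train = [0.90, 0.60, 0.40, 0.30, 0.20, 0.10]
test = [0.95, 0.70, 0.50, 0.45, 0.50, 0.60]
best, gaps = analyze_curve(train, test)
print(best)                         # 3: under-fitting before it, over-fitting after it
print([round(g, 2) for g in gaps])  # [0.05, 0.1, 0.1, 0.15, 0.3, 0.5]
```

After the best epoch the gap keeps widening, which is exactly the over-fitting signature.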

Early Stopping

Look at the training and testing errors again. The network enters the over-fitting region when the test error starts to rise; that is when it begins losing its ability to generalize. The natural instinct, then, is to stop training as soon as the test error starts to rise. This is known as Early Stopping.

In practice, it’s best not to stop training the moment the test error rises. The test error fluctuates from epoch to epoch, so a single rise may just be noise; what matters is the trend. So leave some headroom before you stop training.
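A common way to implement this headroom is a "patience" counter: stop only after the held-out error has failed to improve for a set number of consecutive epochs, and keep the weights from the best epoch. The sketch below is a minimal, framework-free version of that rule. The function name and the `patience`/`min_delta` parameters are illustrative choices, and it only decides *when* to stop given a list of per-epoch errors; the loss values are made up.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of per-epoch held-out losses.

    Training stops once `patience` consecutive epochs fail to improve on the
    best loss seen so far by more than `min_delta`.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # New best: remember it and reset the patience counter.
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    # Patience never ran out: training simply used every epoch.
    return best_epoch, len(val_losses) - 1


losses = [1.0, 0.8, 0.6, 0.55, 0.57, 0.54, 0.56, 0.58, 0.60, 0.65]
print(early_stopping_epoch(losses, patience=1))  # (3, 4)
print(early_stopping_epoch(losses, patience=3))  # (5, 8)
```

With no headroom (`patience=1`) the single bump at epoch 4 stops training and misses the better value at epoch 5; a little patience rides out that fluctuation and still stops once the upward trend is clear.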

Dropout

You know that the gradient each weight receives tells it how much it should change for the final loss to decrease. Notice that this is not an isolated operation: each gradient is computed in a way that depends on many other neurons. So a neuron may end up correcting not only its own mistakes but also other neurons’ mistakes. This gives rise to complex co-adaptations between neurons, which can be useful, but which also contribute to over-fitting.

To reduce this, we can randomly choose some neurons in a hidden layer and shut them off (set their outputs to zero) on each training step. This is the idea behind Dropout. The weights are then updated as if those neurons never existed, so no neuron can count on a particular other neuron being present. The figure from the original dropout paper illustrates this well.

Notice that dropout is applied only during training. Applying it at test time would make no sense: you would be evaluating a randomly thinned copy of the network instead of the full network you trained. Each neuron is dropped with a probability that you set, called the dropout rate. Choosing a good dropout strategy is a topic for another post.
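Here is a minimal sketch of dropout on one layer’s activations in plain Python. It assumes the "inverted dropout" variant common in modern frameworks: surviving activations are divided by `1 - rate` during training, so nothing has to be rescaled at test time. (The original dropout paper instead scales the weights at test time; the two agree in expectation.) The function name and signature are illustrative.

```python
import random


def dropout(activations, rate, training, rng=None):
    """Inverted dropout over a list of activations (illustrative sketch).

    rate: probability of dropping each neuron (the dropout rate).
    training: dropout is applied only when this is True.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        # At test time every neuron is kept and nothing is rescaled.
        return list(activations)
    rng = rng if rng is not None else random.Random()
    keep_prob = 1.0 - rate
    # Each neuron is independently zeroed with probability `rate`; survivors are
    # scaled by 1 / keep_prob so the expected value of each output is unchanged.
    return [0.0 if rng.random() < rate else a / keep_prob for a in activations]


h = [1.0, 2.0, 3.0, 4.0]
print(dropout(h, rate=0.5, training=True, rng=random.Random(0)))  # a randomly thinned layer
print(dropout(h, rate=0.5, training=False))                       # [1.0, 2.0, 3.0, 4.0]
```

Each training call samples a different thinned network, which is what breaks up the co-adaptations described above.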

L1 and L2 Regularization

Another prominent problem in neural network training is imbalance between weights: some weights grow very large and drown out the effect of others, which contributes to over-fitting. The idea is to penalize these weights and keep them under control. We do this by adding a function of the weights to the loss, so the new loss function looks something like

$$L = \text{cross entropy} + \lambda f(W)$$

Here $\lambda$ controls how strongly we penalize the weights. If $\lambda$ is too high, the model will under-fit. There are two common choices for $f(W)$:

- $f(W) = \sum_{j}|W_j|$: this is known as L1 Regularization (applied to linear regression, it is called Lasso Regression). It tends to produce sparse weight vectors, because many small weights are driven to exactly zero. This is useful for feature selection, which we’ll discuss in a later post.

- $f(W) = \sum_{j}W_{j}^{2}$: this is known as L2 Regularization (applied to linear regression, it is called Ridge Regression). It tries to keep the weights homogeneous, spreading the weight across many moderate values instead of a few large ones. It is usually the better default for training neural networks.
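To see why L1 produces sparse weights while L2 shrinks them evenly, it helps to look at what each penalty alone does to the weights during training. The sketch below applies only the penalty part of an update. For L2, the gradient of $\lambda w^2$ is $2\lambda w$, so each step shrinks every weight by the same *fraction*. For L1 it uses soft-thresholding, the proximal step for $\lambda |w|$ (a standard way to handle the kink at zero, swapped in here for a plain gradient step), which subtracts the same fixed *amount* from every magnitude and clips at zero. The learning rate, $\lambda$ and the weights are arbitrary illustrative values.

```python
import math


def l1_step(weights, lr, lam):
    """Soft-thresholding (proximal) step for the penalty lam * sum(|w|)."""
    t = lr * lam
    # Every magnitude shrinks by the same amount t; anything smaller than t becomes exactly 0.
    return [math.copysign(max(abs(w) - t, 0.0), w) for w in weights]


def l2_step(weights, lr, lam):
    """Gradient step for the penalty lam * sum(w**2); its gradient is 2 * lam * w."""
    # Every weight shrinks by the same fraction, so it approaches 0 but never reaches it.
    return [w - lr * 2 * lam * w for w in weights]


def run(step, weights, lr=0.1, lam=0.1, steps=10):
    for _ in range(steps):
        weights = step(weights, lr, lam)
    return weights


w0 = [0.05, 0.5, 2.0]
print(run(l1_step, w0))  # small weight driven to 0.0, the others reduced by about 0.1
print(run(l2_step, w0))  # every weight scaled by about 0.98**10 (roughly 0.82), none exactly 0
```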

Let’s look at a concrete example of what keeping the weights homogeneous means. For the two weight vectors $(1, 0)$ and $(0.5, 0.5)$, the penalties computed by L1 and L2 are:

- L1: $f((1, 0)) = |1| + |0| = 1$ and $f((0.5, 0.5)) = |0.5| + |0.5| = 1$

- L2: $f((1, 0)) = 1^2 + 0^2 = 1$ and $f((0.5, 0.5)) = (0.5)^2 + (0.5)^2 = 0.5$

L1 gives both vectors the same penalty and so has no preference between them, while L2 penalizes the balanced vector $(0.5, 0.5)$ less and therefore favors it.
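The worked example can be checked in a few lines of Python. The sketch below implements both choices of $f(W)$ and plugs them into $L = \text{cross entropy} + \lambda f(W)$ for a single example. The function names, the predicted probabilities and $\lambda$ are illustrative assumptions; in a real network $W$ would hold every weight of the model.

```python
import math


def l1_penalty(weights):
    return sum(abs(w) for w in weights)


def l2_penalty(weights):
    return sum(w * w for w in weights)


def cross_entropy(probs, true_class):
    """Cross entropy for one example: -log of the probability assigned to the true class."""
    return -math.log(probs[true_class])


def regularized_loss(probs, true_class, weights, lam, penalty):
    """L = cross entropy + lambda * f(W)."""
    return cross_entropy(probs, true_class) + lam * penalty(weights)


for w in [(1.0, 0.0), (0.5, 0.5)]:
    print(w, "L1:", l1_penalty(w), "L2:", l2_penalty(w))
# (1.0, 0.0) L1: 1.0 L2: 1.0
# (0.5, 0.5) L1: 1.0 L2: 0.5

# Hypothetical prediction for one example whose true class is 0.
probs = [0.7, 0.2, 0.1]
print(regularized_loss(probs, 0, (0.5, 0.5), lam=0.1, penalty=l2_penalty))
```

With the same cross-entropy term, the L2-regularized loss is lower for $(0.5, 0.5)$ than for $(1, 0)$, so training is nudged toward the balanced weights.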

How do we deal with under-fitting?

So far we’ve only seen methods for controlling over-fitting. The simplest remedy for under-fitting is to increase your model’s complexity: add more hidden layers, add more units to each of these layers, change the choice of activation functions, and so on.
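One rough way to see how adding layers and units increases model complexity is to count a fully connected network’s trainable parameters. The helper below assumes a plain multilayer perceptron in which every layer has a weight matrix and a bias vector; the layer sizes in the usage lines are hypothetical. Changing the activation function adds expressiveness without changing this count, so the count is only a rough proxy for capacity.

```python
def mlp_param_count(layer_sizes):
    """Weights + biases of a fully connected net with the given layer widths."""
    # Each layer maps n_in inputs to n_out outputs: n_in * n_out weights plus n_out biases.
    return sum((n_in + 1) * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


print(mlp_param_count([784, 32, 10]))        # 25450
print(mlp_param_count([784, 128, 128, 10]))  # 118282: wider and deeper, far more capacity
```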

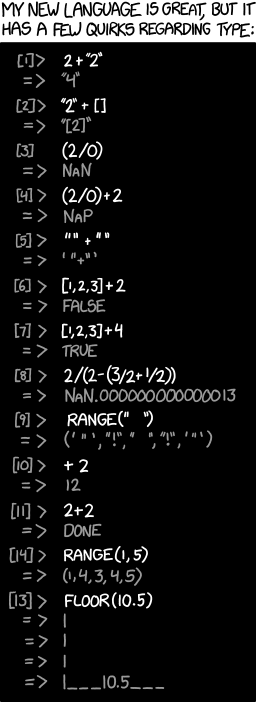

Relax a bit with this post’s comic.