Average over a window

AdaDelta corrects the decreasing gradient problem caused by AdaGrad. It does this with a simple idea. Instead of summing over all the squared gradients so far, only sum over the last $w$ squared gradients and take the mean of it. Since storing all the previous $w$ squared gradients is inefficient, just take the exponentially decaying average of the squared gradients. The equations would be as follows

$$

\text{let} \frac{\partial E}{\partial W} = g, \text{ At the step t of training}\\

\underbrace{E[g^2]_t}_{\text{average of squared gradient}} = \gamma E[g^2]_{t-1} + (1-\gamma){g_t}^2\\

$$

But we don’t need the squared gradient, only the gradient. So apply square root. Which gives you nothing but the Root Mean Squared (RMS)} result.

$$

RMS[g]_t = \sqrt{E[g^2]_t + \epsilon}\\

\text{Here} \epsilon \text{ does the same job as in AdaGrad. The update in the step t is}\\

update_t = \frac{-\eta}{RMS[g]_t}g_t

$$

Correct for units

When applying the update to the weights, the updates should be of the same units as of the weights. The units here are hypothetical. So, the following idea is based on the assumption that if the weights had a unit, the update should have the same. Maintaining the integrity of the units is not done in SGD, momentum or AdaGrad. AdaDelta corrects this by adding another exponentially decaying average of $\Delta W$and taking the RMS of it. (As to why this works, you need to understand Newton’s method. If you do, then read this section in the original paper. Else, move on without any worries.)

$$

E[\Delta W^2]_t = \gamma E[\Delta W^2]_{t-1} + (1 - \gamma) \Delta W^2 \\

RMS[\Delta W]_t = \sqrt{E[\Delta W^2]_t + \epsilon}

$$

As we don’t know $RMS[\Delta W]_t$ at the time step $t$, approximate it with the RMS of the weight updates till the last step, which is $RMS[\Delta W]_{t-1}$. So our final AdaDelta update is obtained by replacing the learning rate with the $RMS[\Delta W]_{t-1}$.

$$

\Delta W = -\frac{RMS[\Delta W]_{t-1}}{RMS[g]_t}g_t\\

W \leftarrow W + \Delta W

$$

Advantages over Momentum, Nesterov and AdaGrad

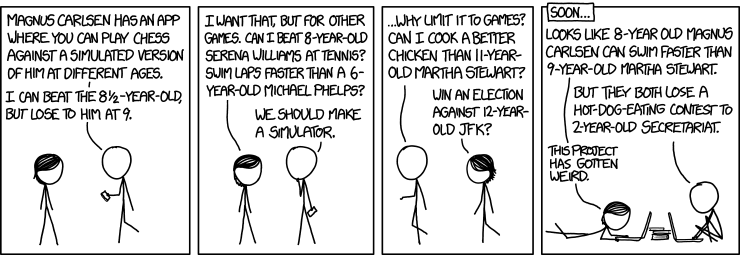

Notice that there is no need to set a learning parameter in the AdaDelta method. This is the main advantage over all the others we’ve seen so far. Let’s see a similar algorithm (RMSProp) which tries to correct the gradient explosion problem of AdaGrad. Enjoy the end of the post comic.