The XOR problem

In the previous post you’ve seen how a perceptron works. Now let’s dive into some more interesting problems in deep learning. What follows is the classic XOR problem. Develop a method for the correct classification of the following points.

Multi Layer Perceptron

It is clear that these points are linear inseparable. So, a perceptron fails at classifying these points. To classify these points it is clear that we need a non-linear boundary. If one perceptron can’t do the job, let’s try combining many of them. To keep it simple and the weights manageable, let’s use the architecture below.

(Note: Every layer stacked between the input and output layer is a hidden layer. We’ll see more about it later in this post.)

But this doesn’t work. Because, the whole output of the last unit is a linear function in $x$ and $y$. We can write the output of $h_3$ as

$h_3 = Ax + By + C$ where

$A = aw_{11} + bw_{12}$

$B = aw_{12} + bw_{22}$

$C = aw_{01} + bw_{02} + c$

Activation Function

This is where an activation function comes to play. An activation function is a non-linear function applied to the end result of a perceptron. This introduces non-linearity to the perceptron and to the network.

Some common activation functions are

ReLU (Rectified Linear Unit)

$$y = max(0, x)$$

Sigmoid

$$y =\frac{1}{1 + e^{-x}}$$

Tanh

$$y = \frac{ e^x - e^{-x}}{ e^x + e^{-x} }$$

Ok, so let’s apply “ReLU” to a perceptron and see what the response is. You can fiddle around with ReLU and become more familiar with it’s response here

Multi Layer Perceptron Activated

Now let’s try activating the perceptrons in our network with relu. Our previous network will now be

Note: The final unit isn’t activated by relu, because we only need it to do binary classification. So we use a Heaviside step function instead. The weights $w_{01}, w_{02}, c$ are biases and are added implicitly and are not shown in the graph.

Now let’s fiddle around with the parameters we mentioned in the formulas above. Try to come up with a set of weights ($w_{01}, w_{11}, w_{21}, w_{02}, w_{12}, w_{22}, a, b, c$), so that you create a region that sepearates these points. If the graph below is too small you can work on it here.

MLP Terminology

Hope you got an intuitive sense of how it works. Before we go further, let’s clear up some terminology. An MLP is a class of feed-forward neural network which we’ll know more about in a later post. In a feed-forward network

Hidden Layer: Any layer that doesn’t directly provide input or output is a hidden layer. The outputs and inputs of these layers are not directly visible or not a point of interest. Thus the name, hidden layer.

Depth of a neural network: The depth of a neural network is the number of hidden layers plus one. Plus one because output weights can be modified. So by increasing the number of hidden layers, you’re increasing the depth of a neural network.

Width of a hidden layer: The number of units in a hidden layer is also known as the width of the layer.

The search for parameters

In the example above you came up with the set of weights that classify the XOR points. But, it’s not practical to search for a set of a weights, especially when we stack many more perceptrons. To understand why a naive search for a best set of weights doesn’t work, let’s consider a simple scenario. Let’s say we have a fully connected network(every node in a layer is connected to every node in the next layer.) and we have 3 hidden layers each with a 100 nodes. Input nodes are 2 and the output is a single node. So the number of parameters will be

- between input and hidden layer 1: 2 x 100

- between hidden layer 1 and hidden layer 2: 100 x 100

- between hidden layer 2 and hidden layer 3: 100 x 100

- between hidden layer 3 and output: 100 x 1

So, we end up with a total of 200 + 10000 + 10000 + 100 = 20,300 parameters. But those are just the number of parameters. Each parameter can have any arbitrary weight. But for the sake of a concrete example, let’s say we bound the weights between -100 and 100 with a step size of 0.01. So the number of possible values for each parameter is $(100 - (-100))/0.01$ = $20,000$. To find an ideal set of weights through naive search, you must search for one instance in 20,300 * 20,000 = 406,000,000. Searching naively through 406 million instances for a simple network is inefficient and unnecessary. This is where Stochastic Gradient Descent can make the search faster towards the goal. We’ll learn all about it in the next post.

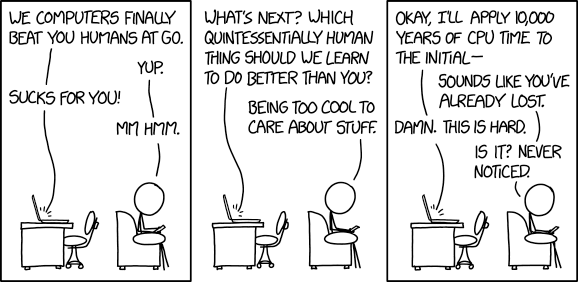

Don’t forget your dopamine shot for making it till the end.