Just to recap what we’ve seen so far:

- Part 1: Neuron and the perceptron algorithm

- Part 2: Multilayer Perceptrons and Activation Function

- Part 3: Cost Function and Gradient Descent

- Part 4: Forward and Backward Propagation

- Part 5: Loss function and Cross-entropy

By this point, we know how a feed-forward neural network works.

- Take in some input and calculate the output

- Calculate the error

- Calculate each weight’s contribution to the error

- Update weights such that the error decreases

- Repeat until the desired classification is achieved
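The five steps above can be sketched in a few lines of code. This is a minimal sketch, assuming a single neuron with a sigmoid activation trained by gradient descent on the binary cross-entropy loss. The two-blob toy dataset, the learning rate, and the epoch count are illustrative assumptions, not values from earlier parts of this series.

```python
import numpy as np

# Minimal sketch: one sigmoid neuron trained with gradient descent on
# binary cross-entropy. Toy data, learning rate and epochs are assumptions.
rng = np.random.default_rng(0)

# Toy data: two Gaussian blobs labelled 0 and 1.
X = np.vstack([rng.normal(-1.0, 0.5, size=(50, 2)),
               rng.normal(1.0, 0.5, size=(50, 2))])
y = np.concatenate([np.zeros(50), np.ones(50)])

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

w = np.zeros(2)
b = 0.0
learning_rate = 0.1
eps = 1e-12  # avoids log(0)

for epoch in range(200):            # 5. Repeat
    # 1. Take in the input and calculate the output (forward pass).
    p = sigmoid(X @ w + b)
    # 2. Calculate the error (mean binary cross-entropy).
    loss = -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))
    # 3. Each weight's contribution to the error: dLoss/dz = (p - y) / N.
    grad_z = (p - y) / len(y)
    grad_w = X.T @ grad_z
    grad_b = grad_z.sum()
    # 4. Update the weights in the direction that decreases the error.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

accuracy = np.mean((sigmoid(X @ w + b) >= 0.5) == y)
print(f"final loss={loss:.4f}, training accuracy={accuracy:.2f}")
```

Note that this only measures accuracy on the same points the neuron was trained on, which is exactly the limitation the rest of this post is about.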

That’s it. But if it looks too good to work, you’re right to be suspicious. Following just the steps above will land you in a lot of trouble in practice. Although they work in principle, the sheer volume of data we have presents many challenges. From this post onward, we’ll look at these problems one at a time.

Training and Testing

Until this post, we’ve only used the classifier to classify the points we gave it during training. But our goal is usually to use the trained network to predict the outputs of instances that don’t have a label. This makes sense: if we already have labeled points, why would we classify them again? The part where we predict the outputs of new inputs is called testing.

Our goal now is to do well not only on the training data but also on the testing data. For example, let’s say you’ve trained a dog breed classifier. Your training data had a million pictures of dogs, and you achieved 100% accuracy on the training set. All of that would be useless if the classifier couldn’t correctly classify the pictures of dogs you took on the sidewalk, though with that much training data, this is very unlikely.

So we train our neural network on the training set and expect it to do well on the testing data. Here we assume that a data-generating function $f$ has created both the training and the testing data. It is this function that our network tries to mimic: by adjusting our weights, we’re trying to identify $f$. Thus, learning the characteristics of the training data usually tells us something about the testing set as well. In practice, we can rarely identify the true data-generating function, because real data is produced by so many natural random processes. We can only estimate it to a certain extent. How well a network trained this way performs on data it has never seen is called generalization. When we generalize well, we perform well on both the training and testing sets.
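To make the idea of a shared data-generating function concrete, here is a minimal sketch. The generator `f` below is hypothetical (a linear boundary with 10% label noise), and the model is the same kind of single sigmoid neuron as before. Because the training and testing sets are drawn from the same `f`, the weights learned on one should also work on the other.

```python
import numpy as np

# Sketch: training and test sets both come from the same hypothetical
# data-generating function f, so what is learned on one transfers to the other.
rng = np.random.default_rng(1)

def f(n):
    """Hypothetical generator: label is 1 when x1 + x2 > 0, with 10% label noise."""
    X = rng.uniform(-1, 1, size=(n, 2))
    y = (X[:, 0] + X[:, 1] > 0).astype(float)
    flip = rng.random(n) < 0.1
    y[flip] = 1 - y[flip]
    return X, y

X_train, y_train = f(200)
X_test, y_test = f(200)  # new points the model never sees during training

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Train a single sigmoid neuron on the training split only.
w, b = np.zeros(2), 0.0
for _ in range(500):
    p = sigmoid(X_train @ w + b)
    w -= 0.5 * X_train.T @ (p - y_train) / len(y_train)
    b -= 0.5 * np.mean(p - y_train)

def accuracy(X, y):
    return np.mean((sigmoid(X @ w + b) >= 0.5) == y)

train_acc = accuracy(X_train, y_train)
test_acc = accuracy(X_test, y_test)
print(f"train accuracy={train_acc:.2f}, test accuracy={test_acc:.2f}")
```

With 10% of the labels flipped at random, neither accuracy can be expected to reach 100%; the point is that the two numbers should land close together, which is what generalizing well looks like.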

Expressive power / Capacity

Can a neural network with one hidden layer and only two nodes in that hidden layer classify a set of points such as these?

You can play with it here if you want to try it out. Change the learning rate and activation functions as you like, then press the play button to train the network and see how well it fits. You just can’t fit these points perfectly unless you design a new activation function. This is where we can talk about the expressive power, or capacity, of a neural network: its ability to mimic the underlying data-generating function. In other words, capacity describes how complex a function the network is able to represent.
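One rough and imperfect proxy for capacity is the number of trainable parameters. Capacity also depends on the activation functions and on how the parameters are arranged, so treat this only as an illustration. The helper `count_parameters` below is hypothetical; it counts the weights and biases of a fully connected network given its layer sizes.

```python
def count_parameters(layer_sizes):
    """Weights plus biases of a fully connected network, e.g. [2, 2, 1]."""
    total = 0
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        total += n_in * n_out + n_out  # weight matrix + bias vector
    return total

# 2 inputs, one hidden layer with 2 nodes, 1 output (the network above).
print(count_parameters([2, 2, 1]))     # → 9
# 2 inputs, two hidden layers with 8 nodes each, 1 output.
print(count_parameters([2, 8, 8, 1]))  # → 105
```

The small network has only 9 numbers to tune, which limits how curved a decision boundary it can draw; adding nodes or layers increases that budget.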

Under-fitting

When a network has low capacity, it struggles to capture the patterns even in the training data, so it also generalizes poorly. This results in a highly biased network. What does it mean for a network to be biased? It means that the network has learned very few rules to classify the data. For example, let’s say we’re trying to classify cars. Our training set has three different cars, a cat, a dog, and a bike. Our testing set contains two cars, a bike, and a chicken. We want our classifier to do something like this:

As you can see, a good classifier separates cars from non-cars. A low-capacity, highly biased network is said to under-fit. It learns something like the following:

So it has only learned to distinguish vehicles from non-vehicles, because of its low capacity.
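The car example is conceptual, but under-fitting is easy to reproduce numerically. This sketch swaps in polynomial regression for a neural network (an assumption made for illustration), with the polynomial degree playing the role of capacity. The hypothetical data generator is a sine wave plus a little Gaussian noise, and the model is fitted with NumPy’s `Polynomial.fit`.

```python
import numpy as np

# Under-fitting sketch: polynomial degree stands in for network capacity.
rng = np.random.default_rng(2)

# Hypothetical data generator: sin(2*pi*x) plus Gaussian noise (std 0.1).
x_train = np.linspace(0, 1, 30)
y_train = np.sin(2 * np.pi * x_train) + rng.normal(0, 0.1, x_train.size)
x_test = rng.uniform(0, 1, 100)
y_test = np.sin(2 * np.pi * x_test) + rng.normal(0, 0.1, x_test.size)

def mse(model, x, y):
    """Mean squared error of a fitted polynomial on (x, y)."""
    return np.mean((model(x) - y) ** 2)

line = np.polynomial.Polynomial.fit(x_train, y_train, deg=1)   # low capacity
cubic = np.polynomial.Polynomial.fit(x_train, y_train, deg=3)  # higher capacity

line_train, line_test = mse(line, x_train, y_train), mse(line, x_test, y_test)
cubic_train, cubic_test = mse(cubic, x_train, y_train), mse(cubic, x_test, y_test)
print(f"line : train={line_train:.3f}  test={line_test:.3f}")
print(f"cubic: train={cubic_train:.3f}  test={cubic_test:.3f}")
```

The straight line does poorly even on the points it was trained on, so both of its errors stay high. That is the signature of a high-bias, under-fit model: more training would not help, only more capacity would.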

Over-fitting

Conversely, when a model has too much capacity, it can over-fit. An over-fit network fits the training set too closely and doesn’t generalize well. The result is a high-variance network: it learns rules that are too specific to the training data.

Here it has learned rules that only recognize red, blue, and yellow cars, because of its high capacity and possibly because it was trained for too long.
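Over-fitting can be reproduced the same way. Again, polynomial regression stands in for a neural network (an assumption for illustration), and the sine-plus-noise generator is hypothetical. With only 10 training points, a degree-9 polynomial has enough capacity to pass through every one of them, noise included.

```python
import numpy as np

# Over-fitting sketch: a degree-9 polynomial can interpolate all 10 noisy
# training points, memorizing the noise instead of the underlying sine.
rng = np.random.default_rng(3)

x_train = np.linspace(0, 1, 10)
y_train = np.sin(2 * np.pi * x_train) + rng.normal(0, 0.2, x_train.size)
x_test = rng.uniform(0, 1, 200)
y_test = np.sin(2 * np.pi * x_test) + rng.normal(0, 0.2, x_test.size)

def mse(model, x, y):
    """Mean squared error of a fitted polynomial on (x, y)."""
    return np.mean((model(x) - y) ** 2)

results = {}  # degree -> (train MSE, test MSE)
for deg in (3, 9):
    model = np.polynomial.Polynomial.fit(x_train, y_train, deg=deg)
    results[deg] = (mse(model, x_train, y_train), mse(model, x_test, y_test))
    print(f"degree {deg}: train={results[deg][0]:.4f}  test={results[deg][1]:.4f}")
```

The degree-9 fit drives its training error to essentially zero, yet it typically does worse than the cubic on the test points, because it has learned rules specific to these 10 noisy samples.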

Note: This description of under-fitting and over-fitting is just for illustration. In practice, you can’t really know what rules the network has learned. We’re dealing with very high-dimensional data, our understanding of what networks learn from it is very limited, and it is an active area of research.

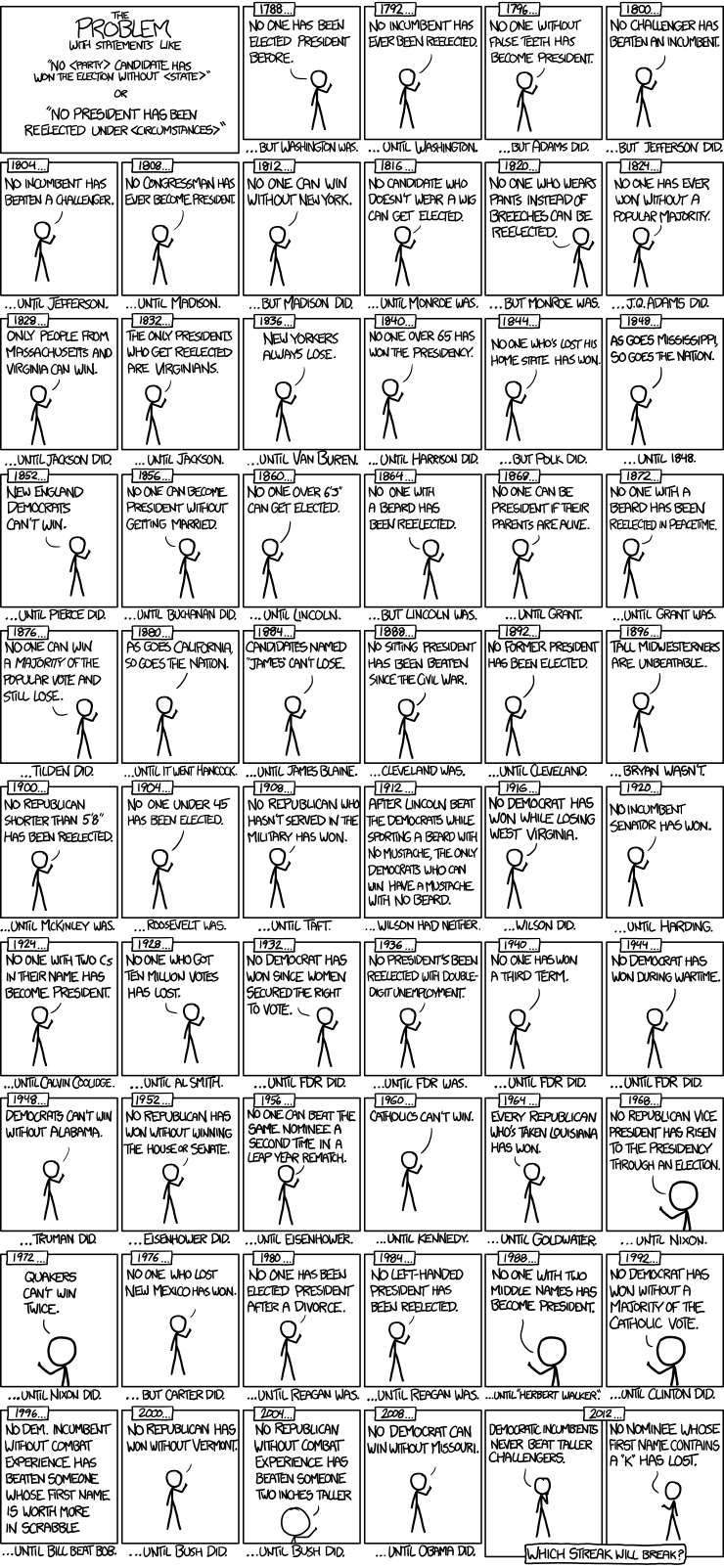

We’ll look at some techniques for overcoming these problems in the next post. Enjoy the comic for now :)

Icon Credits: Icons by freepik