Gradient Descent promises us to take a step closer to a minima (local or global) on each iteration. But due to the high dimensionality, it’s dealing with a very complicated error surface. This introduces a problem of very slow convergence towards the minima. Let’s see a simple yet effective technique to overcome this problem.

The problem with Gradient Descent

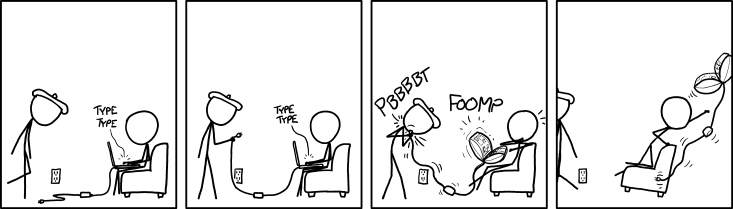

Updates of gradient descent around ravines are similar to the ones shown in the figure below.

The error oscillates so much in an unwanted direction and makes little progress towards the minima. So to overcome these problems, all we’ve to do is decrease the motion in the unwanted direction and increase the motion towards the minima.

Momentum

To do this, we can use the updates that are done so far. Think of each update as a vector. Let’s see the result of adding the previous updates and the update in the current stage.

The resulting vector after adding the previous updates, not only shortens the oscillation but is much better directed towards the minima. This is due to the oscillations canceling out each other in the unwanted direction and adding up in the direction towards the minima. This is the idea of Momentum.

Updates of Gradient Descent

To apply this idea to our gradient descent equations, we need to be storing the sum of the gradients. Let’s call this variable $V$. We now need to update our weights with $V$ rather than $\frac{\partial E}{\partial W}$. Also, we need to be updating V on every iteration to store the sum of the updates so far. So the updated equations for our gradient descent will be

$$

w \leftarrow w - \alpha V \\

V \leftarrow \beta V + (1-\beta)\frac{\partial E}{\partial W}\\

$$

You might be thinking where did the $\beta$ come from? If you think about it, each update vector introduces an unnecessary error in the wrong direction. So it makes sense to take a small part of our current update to minimize the error it makes and add in a large part of the previous sum which is much better in the direction towards the minima. That is what $\beta$ is doing in the equation. It takes a small part of the large error and big part of the small error. The figure below illustrates this fact.

More advanced updates

The momentum update we’ve seen so far is a very simple one. We’ll be looking at many other more good ones in the mini-series on adaptive learning rate algorithms. In this mini-series, we’ll cover one algorithm at a time with an intuitive way of understanding each. Till then, review all our posts so far in the Deep Learning Fundamentals. Don’t forget your comic of this post.