Disclaimer

Up to the last post, we’ve discussed the basic theory of neural networks. From this post on, let’s start putting it into practice. You might have seen titles of the form “create your own deep learning thingy in less than 20 or so lines of code”. While such a title does deliver what it promises, those 20 lines rest on hundreds or even thousands of lines that are abstracted away for convenience. Starting with this post, you’ll learn to code up a mini deep learning library on your own. As I’ve mentioned earlier, the goal is not to reinvent the wheel or add yet another deep learning library to the pool; it’s to deepen your own understanding of what goes on under the hood of one. We’ll be using Python and NumPy.

Neural networks and matrices

All of the representations and operations involved in neural networks are expressed with matrices. You can have a quick recap of the representations here. This matrix form is what makes implementing neural networks and their algorithms (forward prop, backward prop, …) practical. Without it, you would have to keep track of every individual weight and each of its operations separately, which quickly becomes unmanageable as the network grows. Matrix operations also have a special advantage when your CPU/GPU processes them, as the next section explains.
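To make the difference concrete, here is a minimal sketch of one fully connected layer computing z = Wx + b, first weight by weight with explicit loops and then as a single matrix-vector product. The layer sizes and the names `W`, `x`, `b`, `z_loop` and `z_mat` are illustrative assumptions, not part of the library we will build.

```python
import numpy as np

rng = np.random.default_rng(0)

n_in, n_out = 4, 3                        # illustrative layer sizes
W = rng.standard_normal((n_out, n_in))    # one row of weights per output neuron
x = rng.standard_normal(n_in)             # input vector
b = rng.standard_normal(n_out)            # one bias per output neuron

# Per-weight version: every multiply-add is handled individually.
z_loop = np.zeros(n_out)
for j in range(n_out):
    total = b[j]
    for k in range(n_in):
        total += W[j, k] * x[k]
    z_loop[j] = total

# Matrix version: the same computation as one matrix-vector product.
z_mat = W @ x + b

print(np.allclose(z_loop, z_mat))  # True
```

Even for this tiny layer the loop version needs two nested loops and manual index bookkeeping; the matrix version stays a single line no matter how large `n_in` and `n_out` grow.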

Optimizing matrix operations

Matrix operations take advantage of vectorization, a method for achieving parallelism on a single processor core. Vectorization relies on special instructions called SIMD (Single Instruction, Multiple Data) instructions, which operate on several pieces of data at once. For example, consider adding two equal-sized arrays a and b element-wise and storing the result in c. Without vectorization, you would do something like this:

```python
c = []
for i in range(len(a)):
    c.append(a[i] + b[i])  # one addition per iteration
```

But when SIMD operations are available, as they are for NumPy arrays, the whole loop becomes a single expression:

```python
c = a + b
```
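The two versions can be compared directly. The sketch below, assuming NumPy is installed, builds two arrays, adds them with the explicit loop and with `a + b`, checks that the results agree, and times both with Python’s standard `timeit` module. The helper names `add_loop` and `add_vectorized` and the array size are arbitrary choices for illustration. Timings vary from machine to machine, so no figures are quoted here; note also that part of the speed-up comes from NumPy running the loop in compiled code instead of the Python interpreter, not only from SIMD.

```python
import timeit
import numpy as np

n = 100_000                         # arbitrary array size for the comparison
a = np.arange(n, dtype=np.float64)
b = np.arange(n, dtype=np.float64) * 2.0

def add_loop(a, b):
    """Element-wise addition, one element at a time in the Python interpreter."""
    c = []
    for i in range(len(a)):
        c.append(a[i] + b[i])
    return np.array(c)

def add_vectorized(a, b):
    """Element-wise addition delegated to NumPy's compiled (SIMD-capable) loop."""
    return a + b

# Both approaches must produce exactly the same values.
assert np.array_equal(add_loop(a, b), add_vectorized(a, b))

loop_time = timeit.timeit(lambda: add_loop(a, b), number=5)
vec_time = timeit.timeit(lambda: add_vectorized(a, b), number=5)
print(f"loop: {loop_time:.4f} s, vectorized: {vec_time:.4f} s")
```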

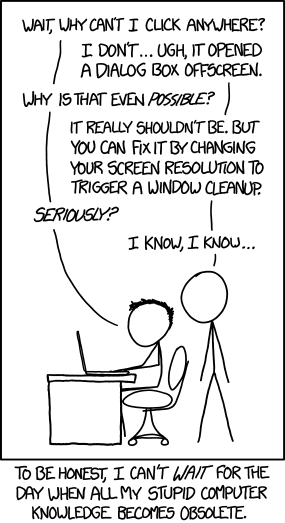

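NumPy uses SIMD operations implicitly: its element-wise operations run in compiled loops that can use SIMD instructions where the CPU supports them. We’ll take advantage of this fact and start building our mini deep learning library from the next post. Enjoy the end-of-post comic.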