A quick tweak to Adam

AdaMax is a variant of Adam. It normalizes $\hat{m_t}$ with the infinity norm ($L^\infty$) of the current and past gradients, instead of the $L^2$ norm that Adam uses. The new steps for updating the weights are as follows:

$$

\text{Let } g_t = \frac{\partial E}{\partial W} \text{ at step } t \text{ of training.}\\

\begin{align}

m_t &\leftarrow \beta_1 m_{t-1} + (1 - \beta_1)g_t\\

u_t &\leftarrow \max(\beta_2 u_{t-1}, |g_t|) \\

\hat{m_t} &\leftarrow \dfrac{m_t}{1 - \beta_1^t}\\

W &\leftarrow W - \dfrac{\eta \hat{m_t}}{u_t + \epsilon}\\

\end{align}

$$
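To make the update rule concrete, here is a minimal NumPy sketch of a single AdaMax step. The function name `adamax_step`, the value `eps=1e-8`, and the toy quadratic loss are assumptions made for illustration; they are not taken from the paper or from any particular library.

```python
import numpy as np

def adamax_step(W, g, m, u, t, eta=0.002, beta1=0.9, beta2=0.999, eps=1e-8):
    """One AdaMax update (illustrative sketch). t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * g        # moving average of the gradients
    u = np.maximum(beta2 * u, np.abs(g))   # exponentially weighted infinity norm
    m_hat = m / (1 - beta1 ** t)           # bias-corrected first moment
    W = W - eta * m_hat / (u + eps)        # eps (assumed value) guards against u == 0
    return W, m, u

# Toy usage: minimize E(W) = 0.5 * ||W||^2, whose gradient is W itself.
W = np.array([1.0, -2.0])
m = np.zeros_like(W)
u = np.zeros_like(W)
for t in range(1, 2001):
    g = W.copy()                           # gradient of the toy loss at the current W
    W, m, u = adamax_step(W, g, m, u, t)
```

Note that the state `m` and `u` is kept per weight, so every weight gets its own effective step size, just like in Adam.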

Because $u_t$ relies on the max operation, it is not pulled towards zero in the early steps the way $m_t$ is, so bias correction is applied only to $m_t$ and not to $u_t$.
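A quick numeric check of the first training step shows why. This is only a sketch: the gradient value `g = 0.5` is made up, and `v1` is Adam's second-moment estimate, included purely for comparison.

```python
beta1, beta2 = 0.9, 0.999
g = 0.5                                # hypothetical gradient at step t = 1

m1 = (1 - beta1) * g                   # first moment, starting from m_0 = 0
v1 = (1 - beta2) * g ** 2              # Adam's second moment, starting from v_0 = 0
u1 = max(beta2 * 0.0, abs(g))          # AdaMax's u_t, starting from u_0 = 0

print(m1, m1 / (1 - beta1))            # m1 is far below g until it is bias corrected
print(v1 ** 0.5)                       # Adam's uncorrected denominator is far below |g|
print(u1)                              # AdaMax's denominator already equals |g|
```

Both moving averages start out shrunk towards their zero initialization, while the max picks up the full gradient magnitude on the very first step.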

Recommended Values

The authors of the paper (see Algorithm 2) recommend the following default values:

$$

\begin{align}

\eta &= 0.002\\

\beta_{1} &= 0.9\\

\beta_{2} &= 0.999\\

\end{align}

$$

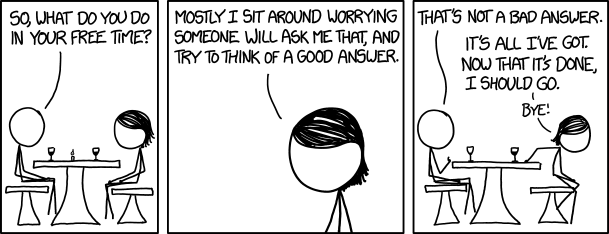

In the next post, let's look at Nadam, which combines Nesterov Accelerated Gradient and Adam. Enjoy your end-of-post comic.